Study finds systematic wave-like patterns of dopamine signaling in reward-based learning

New research from the Carney Institute for Brain Science identifies how dopamine is transmitted throughout the brain during reward-based learning, providing a new take on the traditional understanding of dopamine neurotransmission.

The paper, published in Cell, shows how regions of the brain receive targeted dopamine signals. The wave-like signaling patterns relate the amount of dopamine received by one area of the brain, which may be more specifically correlated with reward-based behaviors, to the amount received by other areas.

“We were able to discover that the large territories of the striatum — a region deep in the forebrain — end up getting coordinated waves of dopaminergic signals,” said Arif Hamid, the first author of the paper, who is completing a postdoctoral fellowship at Brown University and is a Howard Hughes Medical Institute Gray fellow.

According to Hamid, dopamine cells were previously known to project profusely towards the front part of the brain. “Each dopamine cell in the mouse, for example, contacts 2 million cells or so,” he said. “This impressive divergence could be an ideal architecture for broadcasting signals globally, like a megaphone.”

A longstanding view in neuroscience held that, in response to rewarding stimuli, the neurotransmitter dopamine blasted signals everywhere in the brain, according to co-senior author Christopher Moore.

“There have been hints that dopamine isn't this monolithic signaling molecule,” said Moore, who is a professor of neuroscience at Brown University and the associate director of the Carney Institute for Brain Science. “What hadn’t been found yet was that there was a systematicity to its patterning.”

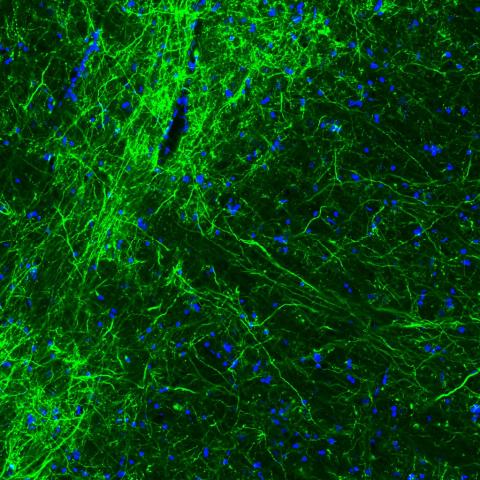

The researchers also studied the functional role of these wave patterns through a mix of experiments on dopamine signaling in mice and computational modeling. The project was made possible by a collaboration between Moore’s experimental laboratory and the theoretical approach of Michael Frank, Edgar L. Marston Professor of Cognitive, Linguistic & Psychological Sciences.

The researchers deciphered the activation patterns of dopamine in the context of its two main functions, Hamid said. “Dopamine drives motivation — it gets us fired up to pursue our current goals — but it also simultaneously allows us to learn from the consequences of our actions now so that we can kind of decide or choose better in the future, and ultimately master our choices, actions and thoughts,” he said.

Dopamine activity in the brain is specifically modulated by situations that produce a reward prediction error, the researchers said. A reward prediction error occurs when the reward for a behavior doesn’t match an individual's prediction, and this mismatch activates the brain's dopamine system.
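
As a rough illustration of the idea, a reward prediction error can be written as the difference between the reward received and the reward expected. The sketch below is a textbook-style simplification, not the model from the paper; the function names and the `learning_rate` parameter are assumptions introduced here for illustration only.

```python
def reward_prediction_error(received_reward: float, predicted_reward: float) -> float:
    """Return the mismatch between the reward obtained and the reward expected.

    Positive: the outcome was better than predicted.
    Negative: the outcome was worse than predicted.
    Zero: the outcome matched the prediction.
    """
    return received_reward - predicted_reward


def update_prediction(predicted_reward: float,
                      received_reward: float,
                      learning_rate: float = 0.1) -> float:
    """Nudge the prediction toward the observed reward (hypothetical update rule)."""
    error = reward_prediction_error(received_reward, predicted_reward)
    return predicted_reward + learning_rate * error
```

In this simplified picture, repeated calls to `update_prediction` shrink the error over time as the prediction catches up with the reward actually delivered.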

“We indeed saw evidence for dopamine transients resembling reward prediction errors,” said Frank, co-senior author on the paper and director of the Center for Computational Brain Science. “But these were embedded within a richer set of dynamics that also included traveling waves, as well as ramps of dopamine within striatal subregions while an animal is pursuing a reward.”

“So we built a model that ties together these various dopamine dynamics in the service of a larger goal: allowing the animal to infer when they are in control over rewarding events, and to then assign ‘credit’ to the corresponding brain regions that facilitate adaptive behavior,” Frank added.

Building from this expanded framework, the researchers said they wanted to address a gap in the field. “We never really understood how dopamine arrives at different parts of the brain, and how such patterns help animals learn the relationship between their actions and rewarding outcomes,” Hamid said.

The behavioral experiments allowed the researchers to compare dopamine dynamics when the mice were in control of getting the reward with dynamics when the mice experienced the same sensory and rewarding events without having to control them. The researchers found that the direction of both the dopamine ramps while the animal was pursuing a reward and the waves during the rewards themselves varied depending on the animal’s level of control.

When the animal needed to work to receive rewards, dopamine in a striatum subregion ramped up, and the more it did so, the more the reward induced a wave that originated from that region, which in turn predicted the animal’s future behavior. When events were outside the animal’s control, the ramps and waves went in the opposite direction, and the animal stopped working. This pattern is consistent with the credit assignment model that the authors advanced.

These analyses helped the researchers understand the neural mechanisms by which an animal learns whether it is in control of its world in terms of reward pursuit, and how dopamine facilitates that learning process, Hamid said.

The microscopy work was paired with theory in a cyclical fashion: the models did not simply inform the experiments and lead to the final answer. Rather, each was used to inform the other.

“We were able to merge the experimental evidence with really cutting edge computational models of how the system should effectively work to go in and test very specific predictions of the model itself,” Hamid said.

Breaking down how the brain's dopamine activity patterns may actually cause the functional effects of predictive learning has already raised new research questions, which the researchers will continue to study in the years to come. They said they hope that future projects will uncover exactly how these waves arise mechanistically.